Okay, let’s talk about James Gibson’s model of perception. I was messing around with some ideas the other day, trying to figure out how people actually “see” things: not just taking them in with their eyes, but understanding what they’re looking at.

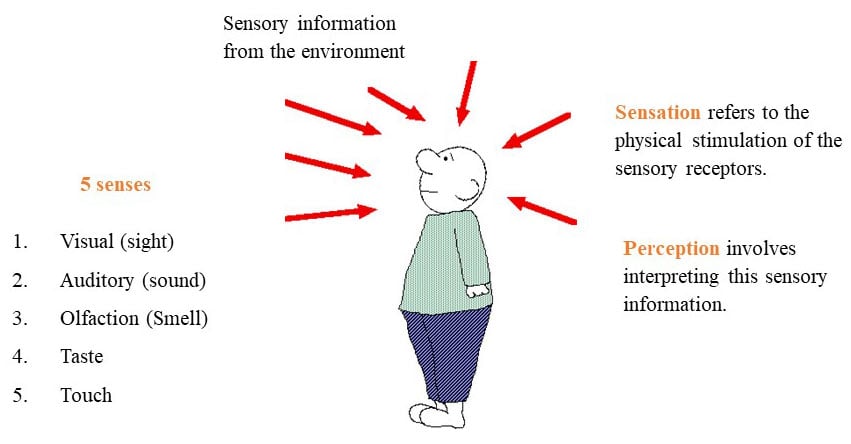

First thing I did was dive into what Gibson himself said. I read up on his whole deal about “affordances.” Basically, he was saying the world tells you what you can do with it. A chair “affords” sitting. A doorknob “affords” turning. Simple enough, right?

Then, I thought, “How can I use this?” I wanted to build something, not just read about it. I decided to focus on a really basic interaction: picking up an object.

So, I grabbed a bunch of random stuff: a mug, a book, a pen, a rubber duck (don’t judge!). I started looking at each one, thinking about what Gibson would say. The mug? It’s got a handle, so it “affords” grabbing and lifting. The book? Flat surfaces, so it “affords” stacking and reading. You get the idea.

Next step: I tried to break it down even further. What visual cues tell you it’s grabbable? For the mug, it’s the size of the handle relative to my hand, the way the light reflects off the curved surface, even the texture (a smooth, glazed surface means less friction, so it’s harder to grip, which is part of why the handle matters). I wrote all this stuff down.

Here’s where it got a little messy. I realized just seeing those cues wasn’t enough. My experience with mugs also plays a part. I know mugs often hold hot liquid, so I automatically adjust my grip and lifting speed. That’s not just perception; it’s learned behavior influencing perception.

To capture this “experience” part, I started thinking about context. Where is the mug? Is it on a table? Is it empty or full? Is it near a coffee machine? Each of those factors changes how I interact with it. If it’s near a coffee machine, I might reach for it without even thinking, assuming it’s for coffee. That’s affordance plus expectation.
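Here’s a minimal sketch of that “affordance plus expectation” idea in JavaScript. It’s purely illustrative: the function name `planGrab`, the context fields (`isFull`, `nearCoffeeMachine`), and the grip and speed labels are all made up for this example, not taken from any real system.

```javascript
// Hypothetical sketch: the field names and labels are assumptions
// chosen for illustration, not measured values.
function planGrab(context) {
  // Start from the plain affordance: the mug can be grabbed.
  const plan = {
    action: "grab",
    grip: "normal",
    liftSpeed: "normal",
    assumedUse: null,
  };

  // Learned expectation: a full mug might be hot and can spill,
  // so grip more firmly and lift more slowly.
  if (context.isFull) {
    plan.grip = "firm";
    plan.liftSpeed = "slow";
  }

  // Context shapes expectation: a mug by the coffee machine
  // is probably meant for coffee.
  if (context.nearCoffeeMachine) {
    plan.assumedUse = "coffee";
  }

  return plan;
}

const fullMugPlan = planGrab({ isFull: true, nearCoffeeMachine: true });
const emptyMugPlan = planGrab({ isFull: false, nearCoffeeMachine: false });
```

The point is that the object is the same in both calls. Only the context changes, and the planned interaction changes with it.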

Then, I tried to create a simple “perception” system. I sketched out a really basic flowchart. Input: visual data (shape, size, color, texture). Processing: identify potential affordances based on known object properties. Output: suggested actions (grab, lift, pour). Super simplified, obviously.
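That flowchart could be sketched in code roughly like this. Treat it as a toy version under stated assumptions: the rule table, the property names (`shape`, `sizeCm`, `hasHandle`), and the size thresholds are hypothetical and picked only to make the example work.

```javascript
// Processing step: hypothetical rules mapping visual properties to affordances.
// The thresholds (10 cm, 30 cm) are illustrative guesses, not real data.
const AFFORDANCE_RULES = [
  // Grabbable if it has a handle or is small enough to wrap a hand around.
  { affordance: "grab", test: (v) => v.hasHandle || v.sizeCm <= 10 },
  // Liftable if it is not too big to pick up with one hand.
  { affordance: "lift", test: (v) => v.sizeCm <= 30 },
  // Pourable if it is an open container.
  { affordance: "pour", test: (v) => v.shape === "open-container" },
];

// Input: visual data. Output: suggested actions, in rule order.
function suggestActions(visual) {
  return AFFORDANCE_RULES
    .filter((rule) => rule.test(visual))
    .map((rule) => rule.affordance);
}

// Input step: made-up visual descriptions of two objects.
const mugVisual = {
  shape: "open-container",
  sizeCm: 8,
  hasHandle: true,
  color: "white",
  texture: "smooth",
};
const bookVisual = {
  shape: "flat-box",
  sizeCm: 3, // thickness, the dimension a hand has to span
  hasHandle: false,
  color: "blue",
  texture: "matte",
};

console.log(suggestActions(mugVisual));  // ["grab", "lift", "pour"]
console.log(suggestActions(bookVisual)); // ["grab", "lift"]
```

Keeping the rules in a table means adding a new affordance is just one more entry, which fits the “known object properties” framing of the flowchart.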

I wanted to build a quick prototype. I used a really simple JavaScript game engine to simulate a hand picking up objects. All I did was set up an array of objects and make each one react to what the cursor did to it. It was clunky, but it showed how you could create a system that recognizes basic affordances. If the mouse cursor hovered over a mug, a little “grab” icon appeared.
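For a rough idea of the hover logic, here’s a minimal sketch in plain JavaScript with no game engine. It isn’t the actual prototype code: the object layout, the `affordances` field, and the function names `objectUnderCursor` and `iconFor` are assumptions made up for this example.

```javascript
// Hypothetical scene: each object has a bounding box and a list of affordances.
const sceneObjects = [
  { name: "mug", x: 40, y: 40, width: 60, height: 60, affordances: ["grab", "lift"] },
  { name: "table", x: 0, y: 120, width: 300, height: 40, affordances: [] },
];

// Return the first object whose bounding box contains the cursor, or null.
function objectUnderCursor(cursor, objects) {
  const hit = objects.find(
    (o) =>
      cursor.x >= o.x &&
      cursor.x <= o.x + o.width &&
      cursor.y >= o.y &&
      cursor.y <= o.y + o.height
  );
  return hit || null;
}

// Decide which cursor icon to show: "grab" over grabbable objects, else "default".
function iconFor(cursor, objects) {
  const hit = objectUnderCursor(cursor, objects);
  return hit !== null && hit.affordances.includes("grab") ? "grab" : "default";
}

console.log(iconFor({ x: 50, y: 50 }, sceneObjects));  // "grab" (over the mug)
console.log(iconFor({ x: 10, y: 130 }, sceneObjects)); // "default" (over the table)
```

In a browser you would call `iconFor` from a `mousemove` handler and swap the cursor image based on the result. Keeping the hit test separate from the rendering makes it easy to check without a canvas.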

The results? It was a very basic example, but it hammered home how important affordances are. Even with a super simple system, you could create interactions that felt natural and intuitive.

Finally, I thought about what I learned. The James Gibson model isn’t just some abstract theory. It’s a practical way to think about how we interact with the world. By understanding affordances, we can design things that are easier to use and more enjoyable.

Next steps? I wanna expand this prototype to include more complex interactions and incorporate machine learning to allow the system to “learn” new affordances based on experience. I also want to look into how different people perceive affordances. Maybe someone with arthritis would perceive the affordances of a mug differently than someone without it.

It’s a work in progress, but it’s been a fun and insightful journey so far.